Image Variants: Only 90 Lines of Python

- Nov 23, 2024

I saw a video arguing that the code behind most 'AI' image generators is so basic that it's almost fraudulent for companies to sell it. That may or may not be true, but I don't like paying for credits for anything, so I wondered what would happen if I asked ChatGPT for Python code that creates image variants locally, using freely available libraries. And ... it worked, in only 90 lines of Python. Admittedly the results are very low grade, but it's free and local. The model pipeline is loaded with StableDiffusionDepth2ImgPipeline.from_pretrained("stabilityai/stable-diffusion-2-depth").

These are the libraries needed in the Python environment, installed from the Windows command prompt (CMD):

    pip install torch
    pip install diffusers
    pip install Pillow
    pip install numpy
    pip install matplotlib
    pip install opencv-python
    pip install timm

Here is the script (with the usual disclaimer that it's for fun and study only, and if you use it, that's on you).
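Before running the script itself, it can help to confirm that every package above is importable in the same Python environment. This is a minimal sketch using only the standard library; the `REQUIRED` mapping and the `missing_packages` helper are illustrative names and are not part of the script.

```python
import importlib.util

# Import name -> pip package name, taken from the install list above.
REQUIRED = {
    "torch": "torch",
    "diffusers": "diffusers",
    "PIL": "Pillow",
    "numpy": "numpy",
    "matplotlib": "matplotlib",
    "cv2": "opencv-python",
    "timm": "timm",
}


def missing_packages(required):
    """Return the pip names of packages whose import name cannot be found."""
    return [
        pip_name
        for import_name, pip_name in required.items()
        if importlib.util.find_spec(import_name) is None
    ]


if __name__ == "__main__":
    missing = missing_packages(REQUIRED)
    if missing:
        print("Missing packages: pip install " + " ".join(missing))
    else:
        print("All required packages were found.")
```

Note that the import name and the pip name differ for Pillow (`PIL`) and opencv-python (`cv2`), which is why the mapping lists both.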

Silly Image Generator [Python]

    import os

    from PIL import Image
    import numpy as np
    import matplotlib.pyplot as plt
    import cv2

    # Record which of the heavier dependencies can be imported.
    TORCH_AVAILABLE = False
    DIFFUSERS_AVAILABLE = False
    TIMM_AVAILABLE = False

    try:
        import torch
        TORCH_AVAILABLE = True
    except ImportError:
        pass

    try:
        from diffusers import StableDiffusionDepth2ImgPipeline
        DIFFUSERS_AVAILABLE = True
    except ImportError:
        pass

    try:
        import timm
        import warnings
        # Silence the FutureWarning raised from timm.models.layers.
        warnings.filterwarnings("ignore", category=FutureWarning, module="timm.models.layers")
        TIMM_AVAILABLE = True
    except ImportError:
        pass


    def main():
        """Main canvas for user interaction."""
        if not TORCH_AVAILABLE or not DIFFUSERS_AVAILABLE or not TIMM_AVAILABLE:
            print("torch, diffusers and timm must all be installed.")
            return

        input_image_path = input("Enter the path to the input image: ").strip()
        if not os.path.exists(input_image_path):
            print(f"File not found: {input_image_path}")
            return

        content_prompt = input("Enter content keywords (e.g., 'A beautiful landscape'): ").strip()
        style_prompt = input("Enter style keywords (e.g., 'in the style of a woodcut.'): ").strip()

        # Save the variants next to the input image. abspath avoids an empty
        # directory name when only a bare file name was entered.
        output_dir = os.path.dirname(os.path.abspath(input_image_path))
        variants = generate_variants(input_image_path, content_prompt, style_prompt, output_dir)

        # Display the generated variants
        if variants:
            fig, axes = plt.subplots(1, 4, figsize=(16, 4))
            for ax in axes:
                ax.axis("off")  # also hides panels left empty by a failed run
            for ax, variant_path in zip(axes, variants):
                ax.imshow(Image.open(variant_path))
            plt.show()


    def generate_variants(input_image_path, content_prompt, style_prompt, output_dir):
        """Generates 4 variants of the input image based on content and style prompts."""
        os.makedirs(output_dir, exist_ok=True)

        try:
            # Load the model (downloaded on first use; needs a CUDA GPU)
            pipe = StableDiffusionDepth2ImgPipeline.from_pretrained(
                "stabilityai/stable-diffusion-2-depth",
                torch_dtype=torch.float16,
            ).to("cuda")
        except Exception as exc:
            print(f"Could not load the model: {exc}")
            return []

        # Convert to RGB so images with an alpha channel or palette also work.
        input_image = Image.open(input_image_path).convert("RGB")

        # Generate 4 variants
        variants = []
        for i in range(4):
            try:
                result = pipe(
                    prompt=f"{content_prompt}, {style_prompt}",
                    image=input_image,
                    strength=0.8,
                )
                output_path = os.path.join(output_dir, f"variant_{i + 1}.png")
                result.images[0].save(output_path)
                variants.append(output_path)
            except Exception as exc:
                print(f"Generation stopped at variant {i + 1}: {exc}")
                break

        return variants


    if __name__ == "__main__":
        main()
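The loop in generate_variants calls the pipeline four times with identical arguments, so the four variants differ only through the random starting noise. If you want to be able to reproduce a variant you like, one option is to give each run its own seed through the `generator` argument that diffusers pipelines accept. The sketch below assumes an already loaded pipeline on a CUDA device, as in the script; `variant_seeds`, `generate_seeded` and `base_seed` are hypothetical names introduced here for illustration.

```python
def variant_seeds(base_seed, count=4):
    """Return one distinct, repeatable seed per variant."""
    return [base_seed + i for i in range(count)]


def generate_seeded(pipe, image, prompt, base_seed=1234, count=4, strength=0.8):
    """Run the pipeline once per seed and return (seed, image) pairs.

    `pipe` is assumed to be an already loaded diffusers image-to-image
    pipeline on a CUDA device, like the one created in the script.
    """
    import torch  # imported lazily so variant_seeds works without torch

    results = []
    for seed in variant_seeds(base_seed, count):
        # A seeded generator makes the starting noise repeatable.
        generator = torch.Generator(device="cuda").manual_seed(seed)
        out = pipe(prompt=prompt, image=image, strength=strength, generator=generator)
        results.append((seed, out.images[0]))
    return results
```

Saving the seed in the output file name (for example `variant_seed_1235.png`) would make it easy to regenerate one particular result later with the same prompt and input image.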

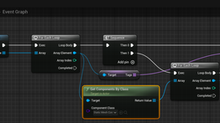

If you run it from IDLE, you'll be prompted for the path to the input image and then for the content and style keywords. After that, you'll see the image generating in steps, as shown below.

The first image in this post, above, was the input image for testing.
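A large input photo can use a lot of GPU memory, so it may be worth shrinking it before passing it to the pipeline. The sketch below is one possible approach and not something the script does: it caps the longest side and rounds both sides down to a multiple of 8, which is the granularity Stable Diffusion pipelines generally work with. The `fit_size` and `load_resized` helpers and the 768 pixel cap are assumptions chosen for illustration.

```python
def fit_size(width, height, max_side=768, multiple=8):
    """Scale (width, height) to fit within max_side, rounded down to a multiple."""
    longest = max(width, height)
    if longest > max_side:
        # Integer arithmetic keeps the aspect ratio without float rounding.
        width = width * max_side // longest
        height = height * max_side // longest
    new_w = max(multiple, width // multiple * multiple)
    new_h = max(multiple, height // multiple * multiple)
    return new_w, new_h


def load_resized(path, max_side=768):
    """Open an image with Pillow, convert it to RGB and resize it with fit_size."""
    from PIL import Image  # lazy import: fit_size itself needs only the stdlib

    img = Image.open(path).convert("RGB")
    return img.resize(fit_size(*img.size, max_side=max_side), Image.LANCZOS)
```

For example, a 1920 x 1080 photo would be reduced to 768 x 432, while a 500 x 333 image would only be trimmed to 496 x 328.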

The images below are outputs from the Python script:

Prompting specifically for medieval colours:

It may be worth trying other diffusers models that are more recent and more powerful, although the steps needed to get them working may be annoying. Browse https://huggingface.co/models?pipeline_tag=image-to-image&sort=trending for candidates. The Python script does image-to-image generation, so bear that in mind when choosing an alternative model.

Clicking 'Use this model' on a model page pops up some information on what the Python code needs in order to load that model.
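As a rough idea of what swapping the model might look like, the sketch below uses the `AutoPipelineForImage2Image` class from diffusers, which picks a matching image-to-image pipeline for the model ids it supports. Not every model on that list will load this way, so treat it as a starting point and defer to the snippet shown on the model's own page. The `load_img2img`, `join_prompt` and `run_variant` helpers are hypothetical names, and the model id is whatever you copy from the model page.

```python
def join_prompt(content, style):
    """Join content and style keywords with a comma, skipping empty parts."""
    parts = [p.strip() for p in (content, style)]
    return ", ".join(p for p in parts if p)


def load_img2img(model_id, device="cuda"):
    """Load an image-to-image pipeline for a Hugging Face model id (assumes a GPU)."""
    import torch
    from diffusers import AutoPipelineForImage2Image

    pipe = AutoPipelineForImage2Image.from_pretrained(model_id, torch_dtype=torch.float16)
    return pipe.to(device)


def run_variant(pipe, image, content, style, strength=0.8):
    """Generate one variant with the same prompt format as the script."""
    result = pipe(prompt=join_prompt(content, style), image=image, strength=strength)
    return result.images[0]
```

Keeping the loading step in its own function means the rest of the script can stay the same when you try a different model.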
